A few comments:

I, too, have been testing Grant's OLMAR algorithm. What I like most about it is that it is, as Nassim Taleb would say, "antifragile" or "convex." Antifragility is a convex response to a stressor, which produces a positive sensitivity to volatility. In plain English: the market crashes, but your strategy makes money. A simple graphic explanation of convexity is at:

http://imgur.com/rRb7c23

In the backtest I posted, look at the returns between the peak and the trough of the October 2007 to March 2009 market crash, with the algo simply left to run with only SPY and IEF to choose from. While the broad market dropped 53% over that period, OLMAR made 20.6%. That's convex.
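
To check a peak-to-trough figure like this yourself, you only need each equity curve's value on the two dates. Here is a minimal sketch; the dict-of-dates input format and the `window_return` name are my own (hypothetical), the dates are assumed S&P peak/trough dates, and the values are toy numbers chosen to mirror the percentages above, not data from the backtest:

```python
from datetime import date


def window_return(equity, start, end):
    """Total return of an equity curve between two dates (inclusive).

    equity: dict mapping datetime.date -> portfolio value (hypothetical format).
    Uses the first and last observations that fall inside the window.
    """
    days = sorted(d for d in equity if start <= d <= end)
    if len(days) < 2:
        raise ValueError("need at least two observations inside the window")
    return equity[days[-1]] / equity[days[0]] - 1.0


# Toy values for illustration only, NOT the backtest results.
benchmark = {date(2007, 10, 9): 100.0, date(2008, 6, 30): 80.0, date(2009, 3, 9): 47.0}
strategy = {date(2007, 10, 9): 100.0, date(2008, 6, 30): 108.0, date(2009, 3, 9): 120.6}

# Assumed peak and trough dates of the benchmark.
peak, trough = date(2007, 10, 9), date(2009, 3, 9)
print(f"benchmark: {window_return(benchmark, peak, trough):.1%}")
print(f"strategy:  {window_return(strategy, peak, trough):.1%}")
```

The same two dates are applied to both curves, which is what makes the comparison meaningful: the window is defined by the benchmark's crash, not by the strategy's own best or worst stretch.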

To trade this live, you only need to make one decision: if IEF is outperforming TLT, trade SPY against IEF; if TLT is outperforming IEF, trade SPY against TLT. As a bonus, OLMAR's drawdowns are mild enough that you could even use SSO (the 2x leveraged S&P 500 ETF) in place of SPY.
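
The IEF-versus-TLT decision can be automated. The post doesn't say how "outperforming" is measured, so this sketch assumes a simple trailing return over a hypothetical lookback (63 trading days, roughly one quarter, is my arbitrary default); `trailing_return` and `choose_bond_etf` are illustrative names, and the price lists are toy data:

```python
def trailing_return(prices, lookback):
    """Simple return over the last `lookback` periods of a price list."""
    if len(prices) <= lookback:
        raise ValueError("not enough price history for the lookback")
    return prices[-1] / prices[-1 - lookback] - 1.0


def choose_bond_etf(ief_prices, tlt_prices, lookback=63):
    """Pick the bond leg to pair with SPY.

    ASSUMPTION: 'outperforming' means a higher trailing return over
    `lookback` periods; ties go to TLT.
    """
    ief = trailing_return(ief_prices, lookback)
    tlt = trailing_return(tlt_prices, lookback)
    return "IEF" if ief > tlt else "TLT"


# Toy prices, oldest first; a short lookback so the example stays small.
ief_prices = [100.0, 101.0, 102.0, 103.0]
tlt_prices = [100.0, 99.0, 101.0, 100.0]
universe = ["SPY", choose_bond_etf(ief_prices, tlt_prices, lookback=3)]
print(universe)
```

The resulting two-asset universe is what you would hand to OLMAR; rerunning the check periodically lets the bond leg switch when the relative performance flips.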

Frankly, Grant, your OLMAR code is, overall, the best-performing code I've found since joining this community. The backtest I've attached is your work; I just gave the algo SPY and IEF to work with and used Interactive Brokers' low per-share commission. IB's fees are low enough, and the performance good enough, that the daily trading frequency is a non-issue. In every one of my tests, the fees were far less than what the average financial advisor charges.
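
To see why daily trading can still be cheap, here is a rough cost estimate. The commission defaults are an ASSUMPTION approximating IB's US "Fixed" per-share schedule ($0.005/share, $1.00 minimum, capped at 1% of trade value), so check IB's current rates; the order sizes, the 1%-of-assets advisory fee, and the $100K capital are hypothetical, and exchange/regulatory fees are ignored:

```python
def ib_fixed_commission(shares, price, per_share=0.005, minimum=1.00, max_pct=0.01):
    """Commission for one order under a per-share schedule.

    ASSUMPTION: defaults approximate IB's US 'Fixed' pricing; verify
    against IB's published schedule before relying on them.
    """
    trade_value = abs(shares) * price
    fee = max(abs(shares) * per_share, minimum)  # apply the per-order minimum
    return min(fee, max_pct * trade_value)       # cap at a % of trade value


# Hypothetical daily rebalance: one SPY order and one IEF order per day.
orders = [(20, 200.0), (30, 105.0)]  # (shares, price), illustrative only
trading_days = 252
capital = 100_000.0

annual_fees = trading_days * sum(ib_fixed_commission(s, p) for s, p in orders)
advisor_fee = 0.01 * capital  # ASSUMPTION: a 1%-of-assets advisory fee

print(f"commissions: ${annual_fees:,.2f} ({annual_fees / capital:.2%} of capital)")
print(f"1% advisor:  ${advisor_fee:,.2f}")
```

Small rebalancing orders mostly hit the per-order minimum, so the annual cost scales with the number of orders rather than with the dollars traded.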

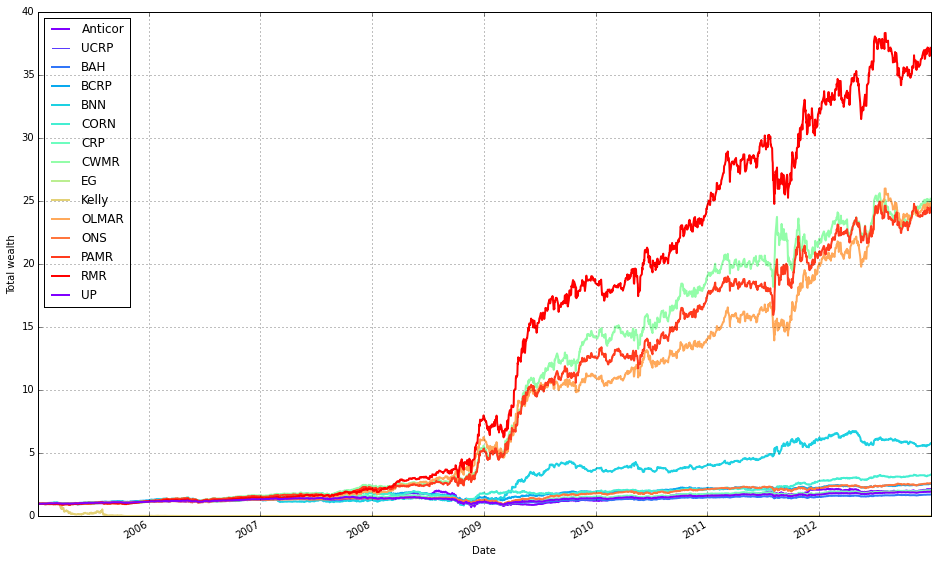

Paul, what a great comparison. Nice work. One caveat: although RMR appears to be the clear winner in your graphical comparison, RMR severely underperforms OLMAR during a crash. Using the same peak-to-trough dates for the S&P crash and giving RMR just SPY and IEF to work with, RMR produces a 17% loss, compared with OLMAR's 20% gain. Nothing, repeat nothing, makes it easier to adhere to a strategy than a minimal drawdown.
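
Since drawdown is the deciding factor here, it helps to measure it the same way for every strategy. A minimal sketch of maximum drawdown (largest decline from a running peak) on an equity curve; `max_drawdown` is an illustrative name and the curve is toy data:

```python
def max_drawdown(equity):
    """Largest peak-to-trough decline of an equity curve, as a negative fraction.

    Returns 0.0 for a curve that never falls below its running peak.
    """
    peak = equity[0]
    worst = 0.0
    for value in equity:
        peak = max(peak, value)                   # running high-water mark
        worst = min(worst, value / peak - 1.0)    # decline from that mark
    return worst


# Toy equity curve for illustration only.
curve = [100.0, 120.0, 90.0, 130.0, 117.0]
print(f"max drawdown: {max_drawdown(curve):.1%}")
```

Unlike the fixed-window return, this looks at the strategy's own worst stretch, which is closer to what makes a strategy hard to stick with.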

I've also tested OLMAR using a "motif" or "thematic" investing approach. For example, suppose hackers start breaching everybody (Sony, JPM, etc.): you identify the top five cybersecurity stocks and turn the trading over to OLMAR. From August 2014 to August 2015, OLMAR doubles the returns that motifinvesting.com/ posted for its cybersecurity "motif" product. This algo is robust, especially if you fundamentally believe that Kelly investing is a superior strategy.
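
For anyone who hasn't looked inside OLMAR, here is a minimal pure-Python sketch of one update step as I understand it from Li and Hoi's moving-average-reversion paper (ICML 2012): predict each asset's next price relative as its w-day moving average divided by today's price, take a passive-aggressive step toward assets predicted to revert upward, then project back onto the long-only simplex. This is NOT Grant's code; `olmar_update`, `project_to_simplex`, the 5-day window, and the reversion threshold eps=10 are my assumptions for illustration, and the prices are toy data:

```python
def project_to_simplex(v):
    """Euclidean projection of v onto {w : w_i >= 0, sum(w) = 1}."""
    u = sorted(v, reverse=True)
    css = 0.0
    theta = 0.0
    for k, uk in enumerate(u, start=1):
        css += uk
        t = (css - 1.0) / k
        if uk - t > 0:  # condition holds for a prefix; keep the last threshold
            theta = t
    return [max(x - theta, 0.0) for x in v]


def olmar_update(b, price_window, eps=10.0):
    """One OLMAR step (sketch).

    b: current weights per asset.
    price_window: list of w price rows, oldest first; each row has one price
    per asset and the last row is today's close.
    eps: reversion threshold (ASSUMED value).
    """
    n, w = len(b), len(price_window)
    today = price_window[-1]
    # Predicted price relative: moving average / today's price.
    x_pred = [sum(row[i] for row in price_window) / w / today[i] for i in range(n)]
    x_mean = sum(x_pred) / n
    dev = [x - x_mean for x in x_pred]
    denom = sum(d * d for d in dev)
    expected = sum(bi * xi for bi, xi in zip(b, x_pred))
    # Step size is zero when the current portfolio already meets the threshold.
    lam = 0.0 if denom == 0.0 else max(0.0, (eps - expected) / denom)
    return project_to_simplex([bi + lam * di for bi, di in zip(b, dev)])


# Toy 5-day window: SPY (column 0) has been falling, IEF (column 1) rising.
window = [[200.0, 100.0], [198.0, 100.5], [195.0, 101.0], [192.0, 101.2], [190.0, 101.5]]
print(olmar_update([0.5, 0.5], window))
```

With a large eps the step usually lands on a corner of the simplex, which is why OLMAR tends to hold concentrated positions in whichever asset has fallen furthest below its moving average.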

Sincerest gratitude to both of you, Grant and Paul, for your intellectual gifts and for so willingly sharing such precious work.

ADDENDUM

Wondering what OLMAR means in real-life, real-money terms? Look at the strategy's ROI, year by year, from 2008 through the end of 2014.

Table: SPX vs OLMAR

So if you invest $100K, you could safely draw $25,000 per year from the strategy. In practice, you live-trade OLMAR on IB and set up an automated electronic (ACH) transfer to your checking account, moving $25,000 / 12 ≈ $2,083 each month. Modify the withdrawal or the investment to suit your risk budget.
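
The withdrawal arithmetic is easy to play with. This sketch sizes the monthly transfer and, under a simplifying assumption (each year's return is earned first and the full annual draw is taken at year end), tracks the balance; the returns below are hypothetical placeholders, NOT the figures from the table, and the function names are illustrative:

```python
def monthly_transfer(annual_draw):
    """Size of each monthly ACH transfer for a given annual withdrawal."""
    return round(annual_draw / 12, 2)


def simulate_withdrawals(start, annual_returns, annual_draw):
    """Year-end balances, ASSUMING each year's return is earned first
    and the whole annual draw is withdrawn at year end."""
    balances = []
    balance = start
    for r in annual_returns:
        balance = balance * (1 + r) - annual_draw
        balances.append(balance)
    return balances


print(monthly_transfer(25_000))
hypothetical_returns = [0.30, 0.25, 0.20]  # placeholders, NOT the table's figures
for year, bal in enumerate(simulate_withdrawals(100_000, hypothetical_returns, 25_000), start=1):
    print(f"year {year}: ${bal:,.0f}")
```

On a $100K starting balance, a $25,000 draw is 25% of capital, so the balance only holds steady in years when the strategy returns at least 25% after fees; weaker years draw down principal.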

Here are some brief definitions for the strategies Paul has compared:

Robust Median Reversion (RMR): exploits the reversion phenomenon using a robust L1-median estimator.

Constant Rebalanced Portfolios (CRP): follows Kelly's idea of keeping a fixed fraction of wealth in each asset in every period.

The best possible CRP strategy in hindsight is known as the Best Constant Rebalanced Portfolio (BCRP), which is the optimal strategy if the market is i.i.d., i.e., if its period-to-period returns are independent and identically distributed over time.

Universal Portfolios (UP): a portfolio whose historical performance is a weighted average of the performance of all CRPs.

Exponential Gradient (EG) strategy: maximizes the expected log portfolio return, estimated from the last price relatives, while minimizing the deviation from the previous portfolio.

Anti-correlation (Anticor): bets on the consistency of positive lagged cross-correlation and negative auto-correlation.

Passive Aggressive Mean Reversion (PAMR): iteratively chooses a portfolio that minimizes the expected return based on the last price relatives.

Confidence Weighted Mean Reversion (CWMR): exploits both the mean reversion property and the variance information of the portfolio.
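
To make the CRP, BCRP and UP definitions concrete, here is a small two-asset sketch. It covers only those three (not RMR, EG, Anticor, PAMR or CWMR); the function names are illustrative, the BCRP is found by a simple grid search, and the UP wealth is approximated as the average of CRP wealths over an evenly spaced grid (i.e., a uniform prior). The toy market is the classic illustration where asset 0 is cash and asset 1 alternately doubles and halves:

```python
def crp_wealth(price_relatives, weights):
    """Final wealth (starting from 1.0) of a constant rebalanced portfolio.

    price_relatives: list of periods; each period lists x[i] = p_t[i] / p_{t-1}[i].
    The portfolio is rebalanced back to `weights` at the start of every period.
    """
    wealth = 1.0
    for x in price_relatives:
        wealth *= sum(w * xi for w, xi in zip(weights, x))
    return wealth


def two_asset_grid(steps):
    """Evenly spaced two-asset weight vectors from [0, 1] to [1, 0]."""
    return [[k / steps, 1 - k / steps] for k in range(steps + 1)]


def bcrp_two_assets(price_relatives, steps=100):
    """Best CRP in hindsight, approximated by grid search."""
    return max(two_asset_grid(steps), key=lambda w: crp_wealth(price_relatives, w))


def universal_portfolio_wealth(price_relatives, steps=100):
    """Approximate UP wealth under a uniform prior: the average CRP wealth on a grid."""
    grid = two_asset_grid(steps)
    return sum(crp_wealth(price_relatives, w) for w in grid) / len(grid)


# Toy market: buying and holding either asset ends flat at 1.0.
relatives = [[1.0, 2.0], [1.0, 0.5]] * 5
best = bcrp_two_assets(relatives)
print("hold asset 1:", crp_wealth(relatives, [0.0, 1.0]))
print("BCRP weights:", best, "wealth:", crp_wealth(relatives, best))
print("UP wealth:   ", universal_portfolio_wealth(relatives))
```

The toy market shows the point of rebalancing: neither asset makes money on its own, yet a 50/50 CRP grows by selling the volatile asset after it rises and buying it after it falls. UP lands between buy-and-hold and BCRP because it averages over every CRP without knowing the best one in advance.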